Now you can create cinematic videos with just a single sentence. For example, enter the word “Jungle” and a film-like jungle scene appears immediately. 🌳🎥

Add a few related words, such as “river,” “waterfall,” “dusk,” or “daytime,” and the AI instantly picks up on what you want. 🌊🌄

With just a single sentence, you can access high-resolution videos showcasing natural beauty, cosmic wonders, microscopic cells, and more.

Introducing Gen2, an AI video editing tool from Runway, the tech company behind Stable Diffusion and the film “Everything Everywhere All at Once.” 🚀📽️

And there is great news: the Gen2 tool recently became available as a free trial!

As a result, netizens have eagerly started trying it out. 😃🌐

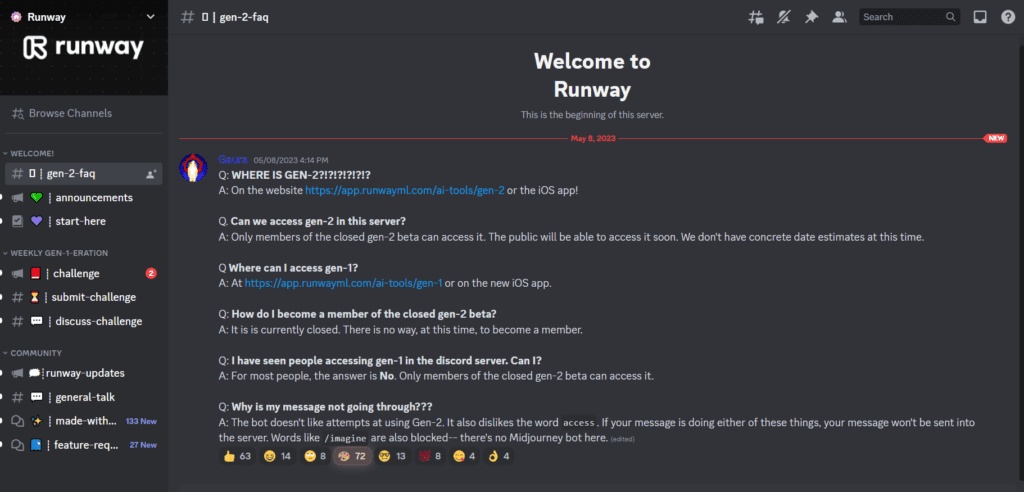

Join the official Runway Gen-2 Discord for more information!

Experience Gen2🎬

Editor’s Experience

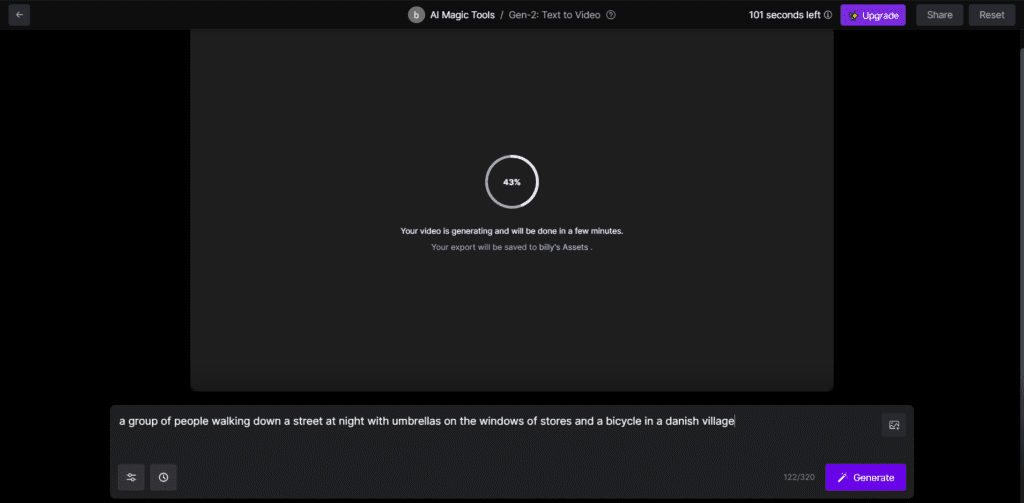

Technology this fun has to be tried first-hand. For example, entering the sentence “A group of people walking down a street at night with umbrellas on the windows of stores and a bicycle in a danish village” in Gen2 instantly produces a 4-second video clip.

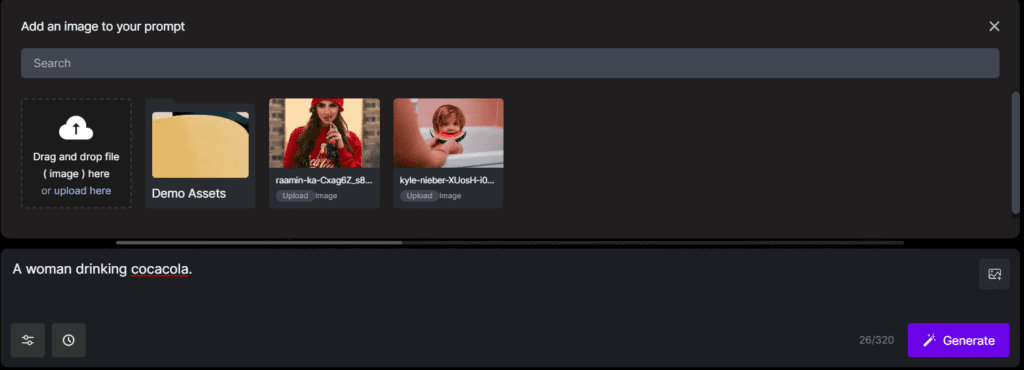

If you want to change the style of the video, you can also upload a picture. For example, we used a photo of “A kid eating watermelon in a bathtub”. Gen2 will then “merge” your input prompt with the photo style.

Editor’s opinion: Because I have little experience with this kind of prompting tool, the generated video seems a little bit scary and disturbing. Click to view if you are ready:

Currently, the free trial on the Runway website covers Gen2’s text-to-video generation, while Gen1 also offers video-to-video generation.

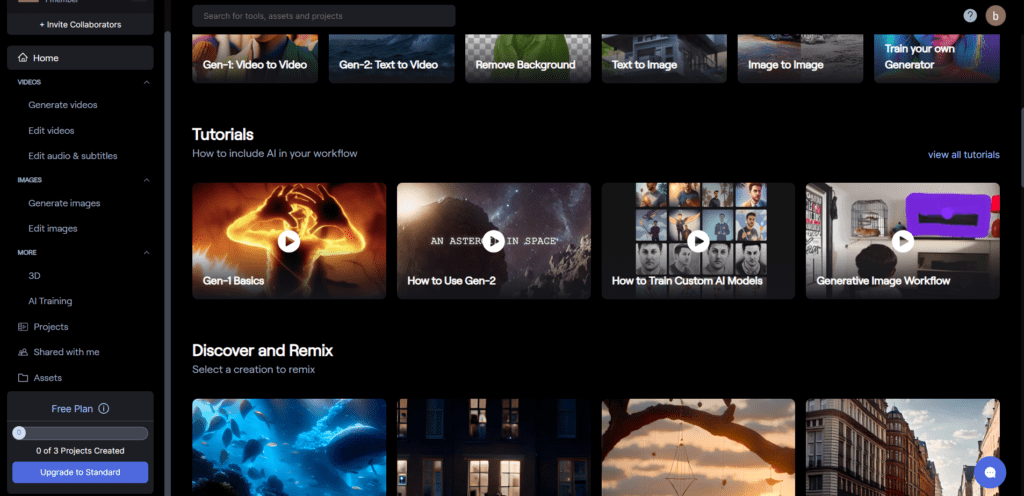

Right now there are several tools, tutorials, and a Discover section to guide users.

AI-Powered Director’s Experience🎬

An international netizen, inspired by “Everything Everywhere All at Once,” went on an exciting multiverse adventure with Gen1.

First, he recorded a video of himself snapping his fingers at home, and in an instant, he found himself on the set of the European royal family:

Then… even species and gender can be easily switched:

Finally, after traversing different time periods and races several times, with a snap of his fingers, the guy returned to his own home:

After witnessing this impressive showcase generated by Gen2, netizens exclaimed in amazement:

PC and mobile compatible 🖥️📱

Both the web and mobile (iOS only) versions are now officially available to try.

Please note that the free trial quota is 105 seconds (remaining quota will be displayed in the upper right corner), with each video being 4 seconds long. In other words, you can generate approximately 26 Gen2 videos for free.
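The quota arithmetic above can be checked with a few lines of Python. This is only a sketch of the division described in the text; the constant names are made up for illustration.

```python
# Free-trial arithmetic from the paragraph above.
FREE_QUOTA_SECONDS = 105   # free trial quota stated in the article
CLIP_LENGTH_SECONDS = 4    # length of each generated Gen2 clip

# divmod gives the number of whole clips and the seconds that remain unused.
full_clips, leftover_seconds = divmod(FREE_QUOTA_SECONDS, CLIP_LENGTH_SECONDS)
print(f"{full_clips} full clips, {leftover_seconds} second(s) left over")
```

So the "approximately 26" figure is 26 whole clips with one second of quota left.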

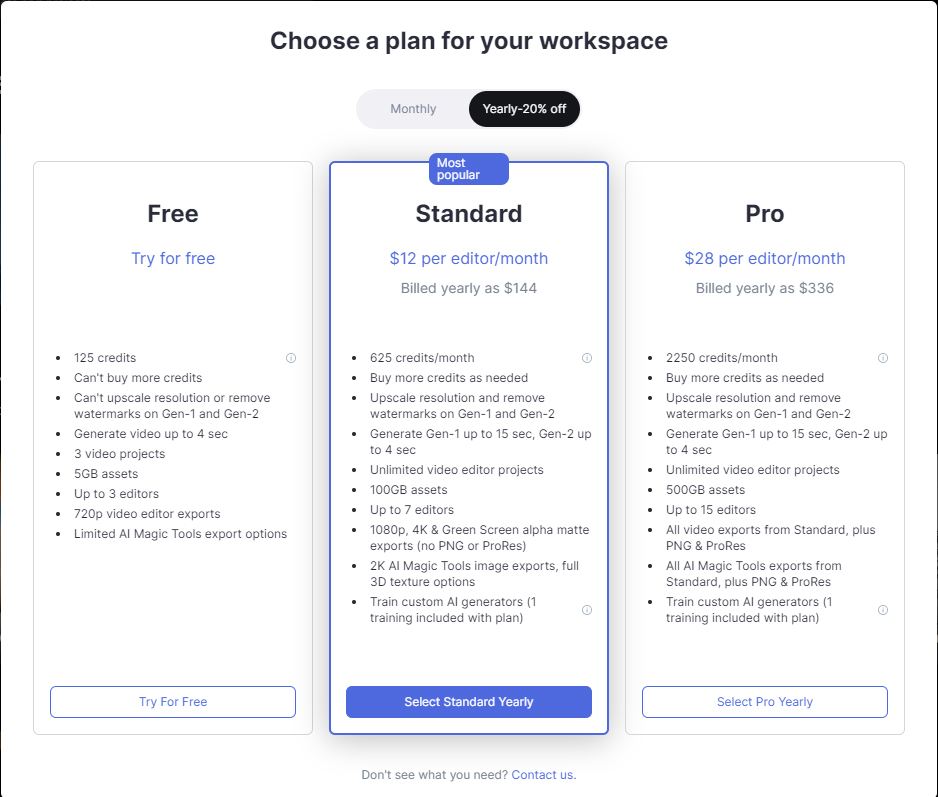

Once the quota is used up, or if you want to remove the watermark, increase the resolution, and access additional features, you’ll need a paid membership. The Standard version costs $12 per month, while the Pro version is $28 per month. Paying for a full year in a single payment gets you a 20% discount.

If you want better results, use the format “in the style of xxx” more often. For example: “A palm tree on a tropical beach in the style of professional cinematography, shallow depth of field, feature film.” Or: “A palm tree on a tropical beach in the style of 2D animation, cartoon, hand drawn animation.”

Alternatively, you can go straight to its inspiration library, choose a video you like, and click “try it” to see how its prompt is written. You can then edit or imitate it.

Some users also mentioned that starting with “cinematic shot of” can make your videos more dynamic (a common complaint is that generated videos come out too static).
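The two prompt tips above (the “in the style of” suffix and the “cinematic shot of” prefix) are easy to apply consistently with a small helper. This is a minimal sketch: `build_prompt` is a hypothetical function of our own for assembling the text you paste into Gen2, not part of any Runway API.

```python
def build_prompt(subject: str, styles: list[str], cinematic_prefix: bool = False) -> str:
    """Assemble a prompt string from a subject, style keywords, and an optional prefix.

    Hypothetical helper for illustration only; it just formats text.
    """
    text = subject.strip()
    if not text:
        raise ValueError("subject must not be empty")
    if cinematic_prefix:
        # Tip from users: lead with "cinematic shot of" for more dynamic results.
        text = f"cinematic shot of {text}"
    if styles:
        # Tip from the article: append "in the style of ..." with style keywords.
        text = f"{text} in the style of {', '.join(styles)}"
    # Capitalize only the first character, leaving the rest untouched.
    return text[0].upper() + text[1:]


film = build_prompt(
    "a palm tree on a tropical beach",
    ["professional cinematography", "shallow depth of field", "feature film"],
)
cartoon = build_prompt(
    "a palm tree on a tropical beach",
    ["2D animation", "cartoon", "hand drawn animation"],
    cinematic_prefix=True,
)
print(film)
print(cartoon)
```

Keeping the subject and the style keywords separate makes it easy to try the same scene in several styles and compare the results.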

Gen-2’s Background

Gen2 was officially announced on March 20th this year and, after more than two months of internal testing, it has finally launched. Its predecessor, Gen1, had been released only a little over a month before that announcement (in February), so the iteration speed is quite fast.

Gen1 is a diffusion-based generative model. It extends diffusion models to video generation by introducing temporal layers into a pre-trained image model and training jointly on image and video data.

It also uses a new guidance method to achieve precise control over the temporal consistency of the generated results.

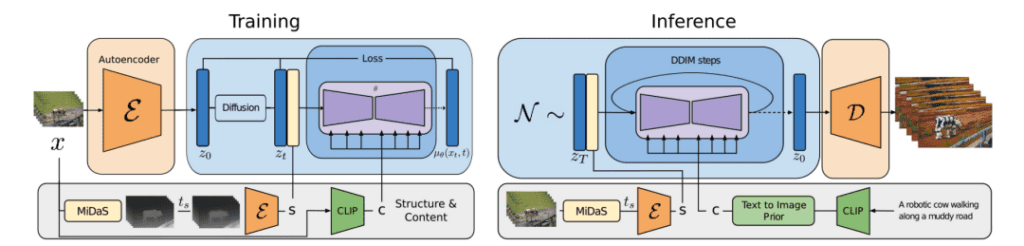

Its architecture is shown in the diagram:

During the training phase, the input video x is first encoded into z0 using a fixed encoder and then diffused to zt.
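To make “diffused to zt” concrete, here is a minimal sketch of a forward diffusion step. The article does not give the noise schedule, so this assumes the standard DDPM forward process, z_t = sqrt(ᾱ_t)·z0 + sqrt(1 − ᾱ_t)·ε, with a linear beta schedule. All function names and numeric values are hypothetical, and a short list of floats stands in for the encoded latent.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances (assumed schedule, not taken from the article)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bar(betas, t):
    """Cumulative product of (1 - beta) over steps 0..t: the fraction of signal kept."""
    prod = 1.0
    for beta in betas[: t + 1]:
        prod *= 1.0 - beta
    return prod


def diffuse(z0, t, betas, rng):
    """Sample z_t from z0 in one shot by mixing the latent with Gaussian noise."""
    a_bar = alpha_bar(betas, t)
    signal_scale = math.sqrt(a_bar)
    noise_scale = math.sqrt(1.0 - a_bar)
    return [signal_scale * z + noise_scale * rng.gauss(0.0, 1.0) for z in z0]


rng = random.Random(0)               # fixed seed so runs are repeatable
betas = linear_beta_schedule(1000)
z0 = [0.5, -1.0, 0.25, 2.0]          # toy stand-in for the encoded video latent
for t in (0, 499, 999):
    zt = diffuse(z0, t, betas, rng)
    print(t, round(alpha_bar(betas, t), 4), [round(v, 3) for v in zt])
```

At small t the latent is almost unchanged, while at the last step almost nothing of z0 remains. The model is trained to undo this corruption.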

Then, the depth map obtained from MiDaS is encoded to extract structural representation s, and CLIP is used to encode one of the frames to obtain content representation c.

Next, the model learns to reverse the diffusion process in the latent space, guided by s, which is concatenated with the input, and by c, which is provided through cross-attention blocks.

During the inference phase, the structural representation s of the input video is provided to the model in the same way.

To specify content through text, the authors also convert CLIP text embeddings into image embeddings using a prior.
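The two conditioning paths can be shown with a toy example. It assumes, following the Gen1 paper's architecture description, that the structure representation s is concatenated with the noisy latent along the channel dimension, while the content representation c is read through cross-attention. This is only a shape-level sketch in plain Python: the helper names are hypothetical, there are no learned projections, and the tiny vectors are made-up stand-ins.

```python
import math


def concat_channels(latent, structure):
    """Per position, append the structure channels to the latent channels."""
    return [z + s for z, s in zip(latent, structure)]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(x - peak) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(queries, context):
    """Each query attends over the context vectors (keys = values = context)."""
    dim = len(context[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in context])
        outputs.append(
            [sum(w * v[i] for w, v in zip(weights, context)) for i in range(dim)]
        )
    return outputs


latent = [[0.1, 0.2], [0.3, 0.4]]   # z_t: 2 positions, 2 channels each (toy values)
structure = [[1.0], [0.0]]          # s: 1 depth-derived channel per position (toy values)
content = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # c: 2 hypothetical content tokens

x = concat_channels(latent, structure)   # structure path: channel concatenation
attended = cross_attention(x, content)   # content path: cross-attention over c
print(x)
print(attended)
```

The split matches the roles in the text: s travels with every spatial position of the input, while c is a global signal that each position can query.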

Ultimately, Gen1 can generate finely controllable videos and customize them with reference images.

However, the publicly released Gen1 could initially only edit existing videos. It was Gen2 that made the leap to generating videos directly from text.

Moreover, it introduced seven additional features at once, including text + reference image video generation, static image to video conversion, video style transfer, and more.

According to official user-study data, Gen2 is indeed more popular among users: its results were preferred over Stable Diffusion 1.5 by 73.53% of users and over Text2Live by 88.24%.

Now that it has officially launched, it has quickly attracted a wave of users trying it out, and some have commented:

So, after its work on SD, can Runway set off the next major trend in generative AI with Gen2?

Take a look at the latest YouTube video on Runway Gen-2.