Apple unveiled its Vision Pro mixed-reality headset at WWDC 2023. Sterling Crispin, a former Apple researcher who worked on Vision Pro, recently revealed on Twitter that the headset draws heavily on neuroscience research to power some of its key features.

Crispin stated, “During my time as a Neurotechnology Prototyping Researcher in Apple’s Technology Development Group, I spent 10% of my life contributing to the development of Vision Pro. It was the longest I’ve ever worked on a single project, and I feel proud and relieved to see it finally announced. I have been working on AR and VR for ten years, and in many ways, this is the culmination of the entire industry.”

He added, “It’s been over five years since I started working on this, and I spent a significant portion of my life on it, as did an army of other designers and engineers. I hope the whole is greater than the sum of its parts and that Vision Pro blows your mind.”



Citing confidentiality agreements, Crispin said he could not disclose many details, though some of the work has been made public through patents. He explained that much of his work at Apple involved detecting users’ mental states during immersive experiences, based on data from their body and brain.

“In other words, when users are in a mixed reality or virtual reality experience, an AI model tries to predict whether they are feeling curious, distracted, scared, or focused, are recalling a past experience, or are in some other cognitive state. These states can be inferred from measurements such as eye tracking, electrical activity in the brain, heartbeats and rhythms, muscle activity, blood density in the brain, blood pressure, and skin conductance,” he explained.

To make these predictions, the team used a range of techniques, some of which are described in the patents. According to Crispin, one of the coolest results was predicting what a user would select before they actually clicked. “Your pupils react before you click, partly because you expect something to happen after the selection.

“So you can create biofeedback with a user’s brain by monitoring their eye behavior and redesigning the user interface in real time to produce more of this anticipatory pupil response. It’s a crude brain-computer interface via the eyes, but it’s very cool, and I would choose it over invasive brain surgery,” he said, likening the technology to “mind reading.”

Crispin added that, to infer users’ cognitive states, the system can also briefly flash visuals or play sounds that users may not consciously perceive and then measure how they react. “Another patent details how machine learning and signals from the body and brain can be used to predict how focused or relaxed you are, or how well you are learning. The virtual environment can then be updated to enhance those states. So, imagine an adaptive immersive environment that helps you learn, work, or relax by changing what you see and hear in the background,” he said.

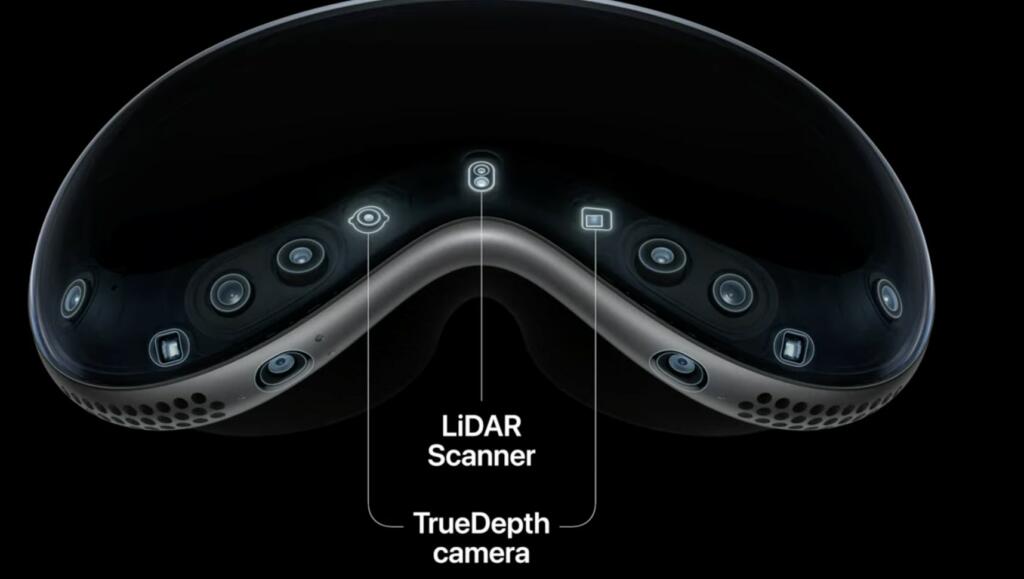

Apple has said it filed 5,000 patents while developing the headset. Its stated goal is not to fully immerse users in virtual reality, but to expand what they can do by blending the real and digital worlds.
